Double binary search (w/ Jane Street's June 2021 challenge)

This was definitely the most difficult Jane Street puzzle I have solved so far. You can find solutions to previous Jane Street puzzles I’ve solved here and here.

The Robot Weightlifting World Championship’s final round is about to begin! Three robots, seeded 1, 2, and 3, remain in contention. They take turns from the 3rd seed to the 1st seed publicly declaring exactly how much weight (any nonnegative real number) they will attempt to lift, and no robot can choose exactly the same amount as a previous robot. Once the three weights have been announced, the robots attempt their lifts, and the robot that successfully lifts the most weight is the winner. If all robots fail, they just repeat the same lift amounts until at least one succeeds. Assume the following: 1) all the robots have the same probability p(w) of successfully lifting a given weight w; 2) p(w) is exactly known by all competitors, continuous, strictly decreasing as the w increases, p(0) = 1, and p(w) -> 0 as w -> infinity; and 3) all competitors want to maximize their chance of winning the RWWC. If w is the amount of weight the 3rd seed should request, find p(w). Give your answer to an accuracy of six decimal places.

Weights vs probabilities

While trying to model this problem mathematically, notice that the weights are not directly of importance: only is. is strictly decreasing and continuous, therefore bijective. It also holds that . Therefore, we can work directly with the probabilities of succesful lifts, and reason about the ordering of weights from this probabilities. With this simple rework we simplify the problem massively. Let’s denote the probabilities of succesful lifts by , and . Robot #3 starts the game by choosing .

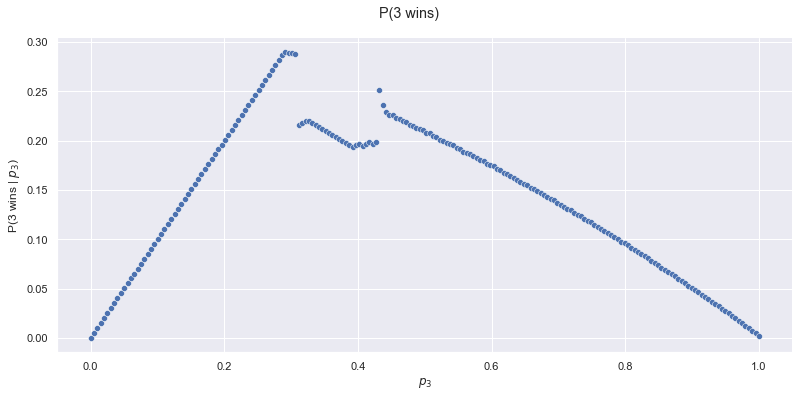

We can make a simulation to get an approximate overview of the problem:

In reality, the full range of is , an infinite amount of points. To simplify this, I chose 400 evenly distributed points on this continuous range (that was the max my computer could handle in a reasonable amount of time). That causes some small artifacts, some lines show some sort of jumping pattern, but we still get a good overview of the game if we ignore these artifacts for now.

Unfortunately, this brute force algorithm is too inefficient to solve the problem to 6 decimal digits of precision. In that case, we would have to choose at least points for all three variables. This algorithm has time complexity where n is the required precision. Definitely not quick enough for .

We can see that our optimal is somewhere around . That value of maximizes the probability of robot 3 winning. That will be our new goal, find the exact that corresponds to the kink at 0.28. Let’s redefine our boundaries on and zoom in:

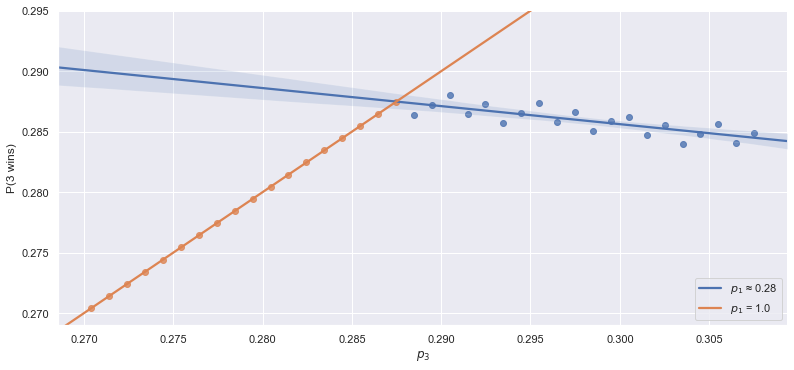

You can clearly see the rounding artifacts in this image. Notice I’ve added colours: orange corresponds to optimal solutions where . Consider the following data:

| P(2 wins) | |||

|---|---|---|---|

| 0.311602 | 1.000000 | 0.436090 | 0.285464 |

| 0.311165 | 1.000000 | 0.436090 | 0.286466 |

| 0.310728 | 1.000000 | 0.436090 | 0.287469 |

| 0.311596 | 0.288221 | 0.441103 | 0.288471 |

| 0.310984 | 0.288221 | 0.441103 | 0.289474 |

| 0.310372 | 0.288221 | 0.441103 | 0.290476 |

Notice the sudden flip of optimal when we increase too much. It is equal to 1.0 at first, but suddenly changes to ~0.28. The kink in the graph seems to correspond with the exact point where the optimal strategy for robot 1 switches from choosing (more on that later) to . It makes sense that this optimum point for robot #3 corresponds to a strategy switch of another player: at some point, robot #3 has made so large that it provides robot #1 the opportunity to “undercut” (aka choose a slightly bigger weight than robot #3) and increase his chance of winning.

Layers of argmax

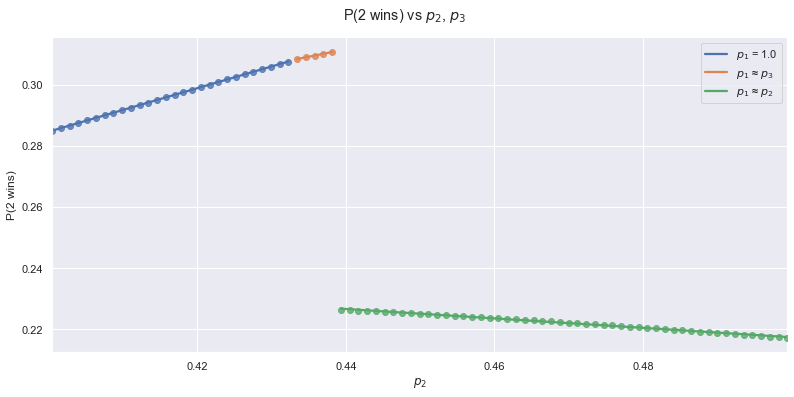

Let’s fix and look at the graph of versus the probability of robot 2 winning (use the slider to change !):

Notice that across the entire range, the optimal corresponds to the rightmost discontinuity. If we zoom in on this discontinuity, we find the following image:

This time, we have three different approximate values of : , or . Why these values? Consider the following graph, where we fix both and (use the sliders to change their values!):

Notice that no matter what values you choose via the sliders, you always have the same values for the discontinuities on the horizontal axis: , and . Furthermore, a maximum of this graph always corresponds to one of these discontinuities, as the function is increasing. This makes it easy to create a reaction function for robot #1: evaluate the chance of winning for the three possible values of and choose the one with the highest probability of winning. It’s easy to obtain this way, and, most importantly, we get the value of to an arbitrary amount of decimals. This reaction function of robot #1 will be called .

Back to robot #2: for a given , and now that we know how robot #1 will react to robot #2 and #3, how can we figure out the exact optimal value of ?

The first binary search

Let’s create the following indicator function:

It basically checks whether is the optimal value for , and that is equivalent to being to the right of the discontinuity we showed above (e.g. is element of the green dots?).

With this indicator function, we can do a binary search on . We keep track of a lower and an upper bound (0.27 and 0.29, for example), and we repeatedly check whether the middle of this interval is to the left or to the right of the discontinuity via the indicator function. If we are to the left of the discontinuity, we set the value of the new lower bound equal to the current middle. In the opposite case, we set the new value of the upper bound equal to the current middle. Because we reduce the size of the interval by half each iteration, we can compute to an arbitrary amount of decimal places in very little time. Each additional digit only takes iterations to compute. We can calculate to 20 decimal places in only 67 iterations! This algorithm provides us with the reaction function of robot #2: .

The second binary search

Back to the main question: what value of maximizes the probability of robot #3 winning?

We already found and . Therefore we have all we need to create a function that returns the maximum probability of 3 winning given some :

I used a trick to check whether is increasing or decreasing at some :

By incrementing by a small amount () and checking the influence of this change on f, we can define an indicator function that checks whether we’re to the left or right of the peak. We apply the same binary search algorithm as described above to this function to find the optimal value to six decimal places: .

Note that actually depends on a binary search, and we use it within the indicator function of another binary search. This makes the runtime of this algorithm where n is the precision in decimal digits. A time complexity I have never encountered before!

My code can be found here.

Analytic solution

I made a lot of assumptions and educated guesses during my initial solve of this problem. The fact that the maximum corresponded exactly to the kink at , for example. My solution was the result of a lot of trial and error, and a lot of simulations to test my hypotheses. It would be a lot more elegant to solve this problem analytically. The Bellman equation can be used to solve problems with optimal substructure, which this problem seems to belong to. I might revisit this problem in the future and try to solve it that way, as I have not figured out how that method exactly works yet!